What did we select?

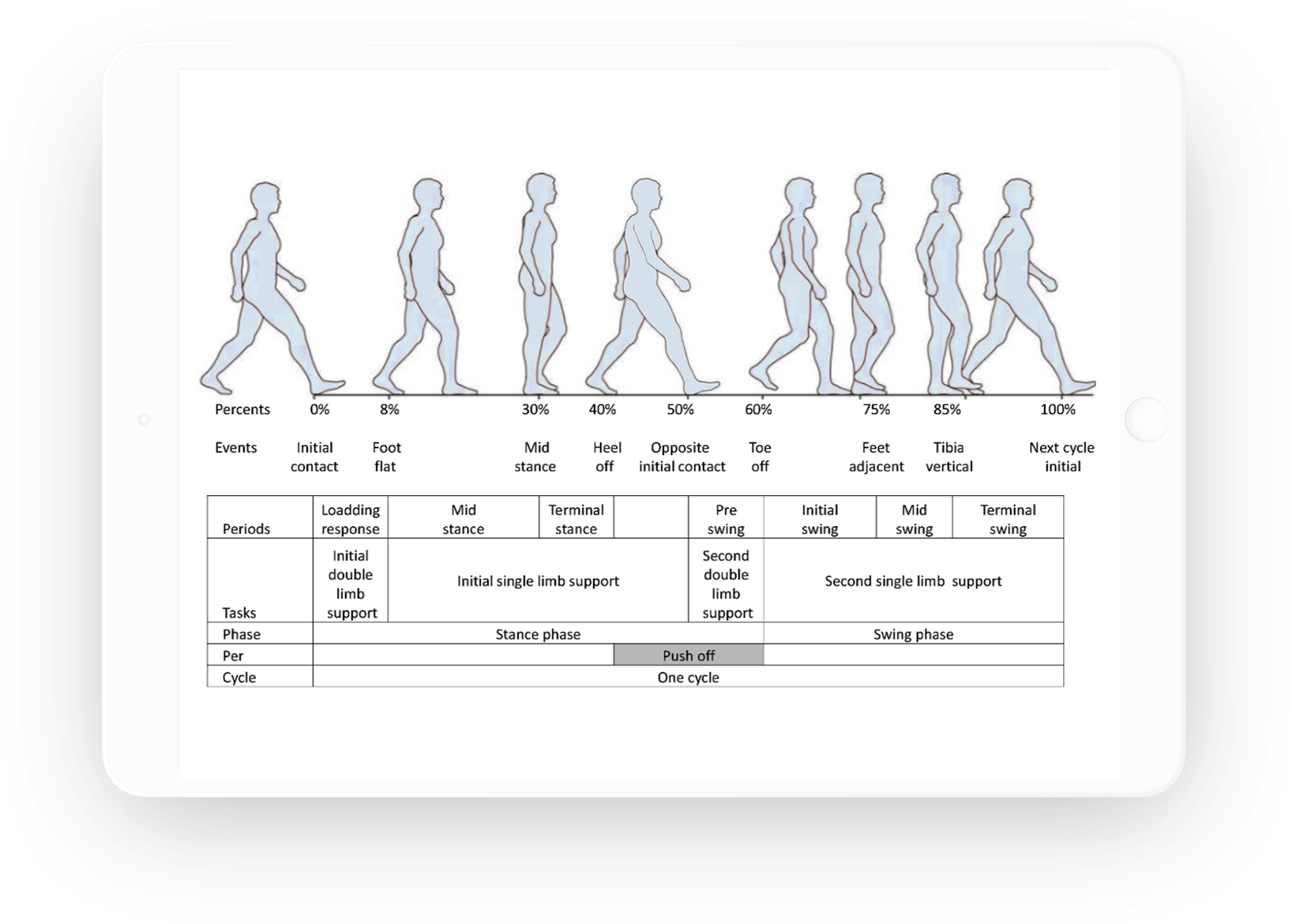

The National Institutes of Health (NIH) 4-Meter Gait Test is commonly used to assess walking speed over a short distance. Gait involves dynamic postural control, where the individual changes their base of support throughout the movement. Gait slowing effects have been shown to be remarkably reliable and durable over time (Parker et al., 2006).
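
Because the test measures walking speed over a fixed 4-meter course, the underlying arithmetic is distance divided by elapsed time. The following is a minimal sketch of that calculation, not the NIH Toolbox scoring procedure: the function name `gait_speed_m_per_s` is hypothetical, and the timing protocol (number of trials, start and stop rules) is not specified here and is left to the caller.

```python
def gait_speed_m_per_s(elapsed_seconds: float, distance_m: float = 4.0) -> float:
    """Return walking speed in meters per second for one timed walk.

    Assumption: the default course length is 4 meters, matching the
    test's name; how the walk is timed is not covered by this sketch.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return distance_m / elapsed_seconds


# Illustrative (made-up) trial: 4 meters walked in 3.2 seconds.
speed = gait_speed_m_per_s(3.2)
print(f"{speed:.2f} m/s")
```

A longer elapsed time over the same distance yields a lower speed, which is how gait slowing would show up in this measure.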

The 4-meter gait test has proven remarkably reliable, consistently yielding some of the highest reliability coefficients, with intraclass correlation coefficient (ICC) values of .96 to .98 for adults (Peters et al., 2013) and .81 for adolescents (Alsalaheen, 2014).

Why we selected it

Research has demonstrated strong characteristics of the NIH 4-meter gait test:

Test-Retest Reliability: 0.96 to 0.98 for adults (Peters et al., 2013); 0.81 for adolescents (Alsalaheen, 2014)

Normative Data: stratified by age and gender (National Institutes of Health)

- Age range: 5-85 years from NIH Toolbox (Kallen et al., 2012)
- Gender: Male and female

No documented ceiling or practice effects with repeat testing.

What did we select?

The Balance Error Scoring System (BESS) is an assessment that measures static balance and postural control. Postural control is reflected in an individual’s amount of sway, with greater neuromotor control associated with less postural sway.

The full BESS has been shown to have moderate to good reliability (Chang et al., 2014), with reliability coefficients of .70 achieved in a sample of children and adolescents when using separate norms for men and women (McLeod et al., 2006). Test-retest values above .90 can be obtained by averaging multiple BESS administrations within one day (Broglio et al., 2009).
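
The same-day averaging mentioned above can be sketched as follows. This is an illustration under stated assumptions, not the published scoring procedure: it assumes each administration is recorded as six per-stance error counts and that the total for an administration is their sum. The function names `bess_total` and `mean_bess_total` and the sample numbers are hypothetical.

```python
def bess_total(errors_per_stance: list[int]) -> int:
    """Sum the error counts for one BESS administration.

    Assumption: one non-negative error count per stance, six stances.
    """
    if len(errors_per_stance) != 6:
        raise ValueError("expected error counts for six stances")
    if any(count < 0 for count in errors_per_stance):
        raise ValueError("error counts must be non-negative")
    return sum(errors_per_stance)


def mean_bess_total(administrations: list[list[int]]) -> float:
    """Average the total error score over several administrations."""
    if not administrations:
        raise ValueError("at least one administration is required")
    totals = [bess_total(counts) for counts in administrations]
    return sum(totals) / len(totals)


# Three made-up administrations from the same day.
same_day = [
    [1, 2, 3, 2, 3, 4],
    [0, 2, 2, 1, 3, 4],
    [1, 1, 3, 2, 2, 3],
]
print(mean_bess_total(same_day))
```

Averaging smooths out trial-to-trial variation in a single administration, which is consistent with the higher test-retest values reported for averaged scores.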

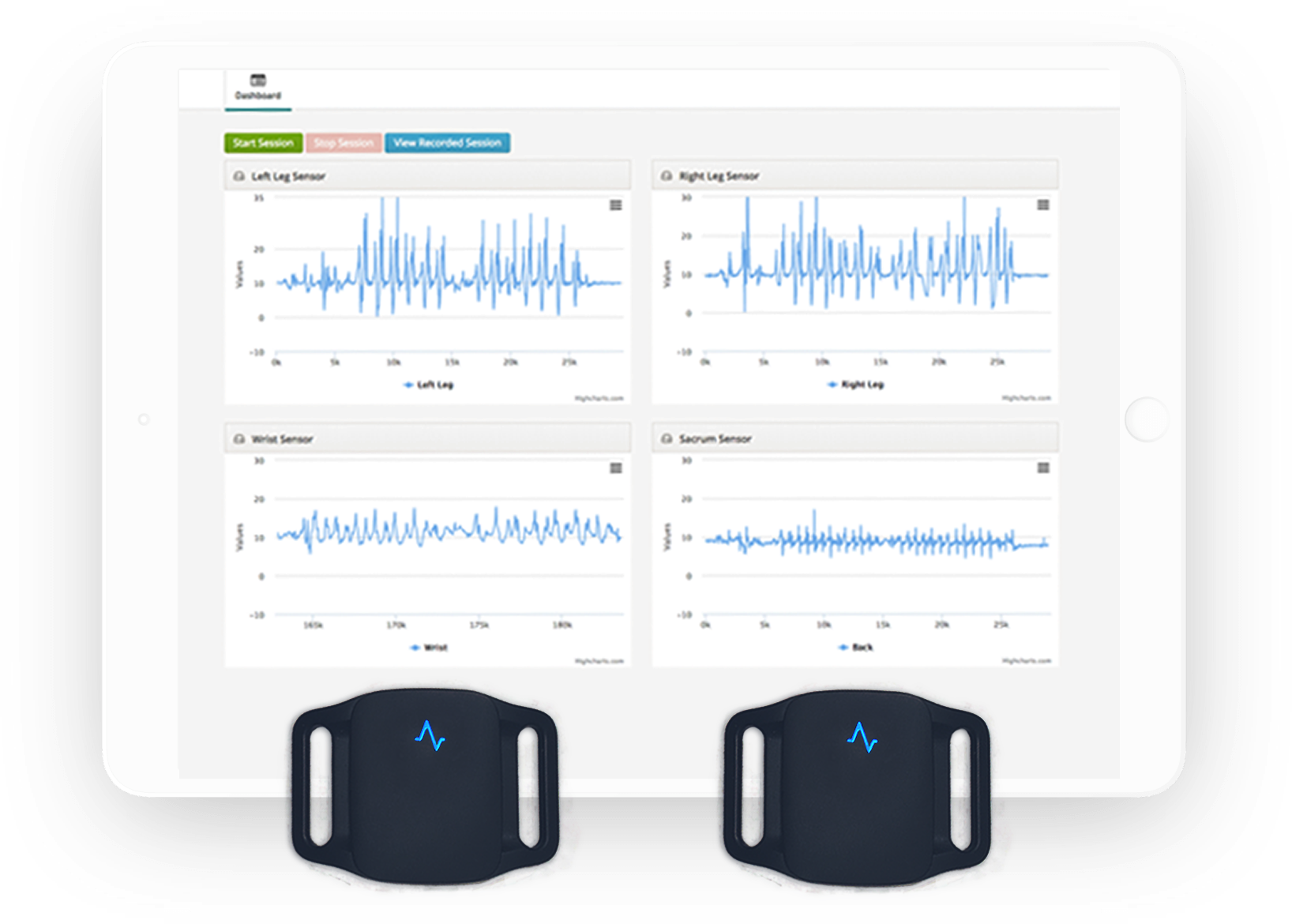

The computerized BESS, which automates and facilitates the delivery and timing of the full BESS, was developed more recently to provide a more objective and quantitative method of assessing balance errors. Comparisons of the computerized BESS with standard scoring procedures have shown that computerized BESS scoring is more sensitive in its measurements of performance and postural stability than scores calculated from traditional motion capture data alone (Alberts et al., 2014). Additionally, inter-rater reliability between computerized and standard human-calculated BESS scores has been found to range from fair to excellent (0.44-0.99) across the six main stances (Caccese & Kaminski, 2014; cf. Houston et al., 2019). With respect to validity, the computerized scores are generally equivalent to human-rated scores across balance conditions (Glass et al., 2019).

Why we selected it

The following research has demonstrated strong characteristics of the BESS:

Test-Retest Reliability: 0.70 to 0.90 in children and adults (e.g., McLeod et al., 2006)

Normative Data: stratified by age and gender (e.g., Iverson & Koehle, 2013)

- Age range: ages 5 to 23 stratified by age and gender; ages 24 and older stratified by age (e.g., Iverson & Koehle, 2013)
- Gender: Male and female

No documented practice or ceiling effects.